Markdown coding

August 5, 2025

As I'm building new tools these days, I'm increasingly writing both specs (upstream of code) and test plans (downstream) in Markdown.

I've written before about writing more READMEs as onboarding context for coding agents, and SpecTree is exploring ways to make Markdown specs more composable and reusable.

Recently, when I talk with other builders who are pushing coding agents like Claude Code, Codex, or Jules to produce more code with higher quality, I'm seeing similar patterns popping up -- we spend our calls swapping tips about naming conventions or folder structures for Markdown files, rarely discussing the implementation details downstream from those decisions.

This isn't "vibe coding" or traditional AI pair programming. It's something different -- we're writing instructions in structured English, and using LLMs implicitly as fuzzy "spec compilers" to translate our intents into working code and tests.

It seems useful to give this style of coding a name, so I'm calling it "Markdown coding".

What's going on here?

Why are builders I talk to all writing more Markdown files and trying to figure out what works?

No one told us to do this. But if you use a tool like Claude Code a while, you will come to a similar realization that context, not capability, is the current bottleneck for both quality and quantity of code it produces.

I don't think anyone is near any sort of efficient frontier yet, as models and harnesses keep evolving underneath us.

Markdown is a natural solution, but the problem we're trying to solve is feeding more high quality context to models that can increasingly follow very detailed instructions for more complex tasks. The models are pulling Markdown out of us, as a way of better explaining what we want them to do.

We're still writing (or least reviewing, maybe skimming?) .py or .go or .tsx files, but those are increasingly downstream of higher-leverage Markdown artifacts that determine how our systems behave or get tested.

This talk from Sean Grove at the last AI Eng World's Fair also captures this idea well, from the perspective of a foundation lab trying to specify the desired behavior of a very complex model (as a model card, written in Markdown on Github).

So why are so many different threads converging on Markdown?

Why Markdown?

John Gruber invented Markdown in 2004 (with help from Aaron Swartz).

It was an elegant, minimally structured form of plain text -- universal, tool agnostic, readable by both humans and computers.

Initially, it was a tool for writing content like blogs or documentation. There are now many static site generators (such as Jekyll or Github Pages) that let you author content in Markdown and render it to various output formats (like HTML or RSS).

You can think of these as Markdown-to-website "compilers". Naturally, this blog post is itself written in Markdown, letting me focus entirely on what I want to say, not details like CSS classes, typography, or mobile responsiveness (still important, but downstream).

Markdown then spread from blogs to become the de facto standard for all engineering documentation. README.txt files were upgraded to README.md.

Coding platforms like GitHub or GitLab adopted Markdown as their native format for documentation, issues, PRs, and comments. Many technical documentation

platforms (such as Docusaurus or Mintlify) use Markdown as their native authoring format, while adding other features like

versioning, collaboration, i18n, or design themes.

In hindsight, Markdown winning in documentation seems almost inevitable, because it gives you only the minimal primitives you need to write good content (headings, tables, emphasis, and so on) and nothing more. It's therefore independent of any particular output format (html, rss?) or styling (css modules, tailwind typography?) that you might care about. It's naturally upstream of any particular rendering implementation for content.

LLMs understand intent...finally

If Markdown now seems natural (even obvious?) for technical documentation, I wouldn't have predicted a few years ago that it would work for writing code or unit tests -- how could it? That sounds crazy. But then two things happened.

First, we invented LLMs, which are the first technology I've ever used that can actually understand my intent, expressed in the natural language our species evolved to talk about our world, and discuss how we might wish to change it.

They have many limitations and make frequent mistakes, but this is a pretty huge change. Understanding language has been a holy grail for computer science going back to at least the 1960s, and until very recently, none of our best solutions worked very well (as anyone who used Siri or Alexa in the 2010s knows well).

Humans are very quick to update our expectations and goalposts for any new technology. We've discovered a mysterious new way to make sand + electricity think (or at least approximate thinking well enough to be useful). That sounds like crazy science fiction, but it's already baked into my expectations. So now I find it annoying when my coding agent tokens stream too slowly via satellites in space to my airplane's wifi. Humans are demanding tool users, we'll never be satisfied.

Still, it's worth pausing to reflect on just how new and strange this all is. I now regularly blather whole paragraphs of thought into a text box, and my tools (mostly) understand what I mean and want them to do, without ever worrying about precise syntax or typos or semicolon placement. That's crazy! We dreamed about this and worked on it for many decades (how many PhD theses?) and it never really worked, until it strangely started to work a few years ago for reasons I still don't fully understand.

The second thing that happened is that Gruber's Markdown format was so good that humans churned out tons of content in Markdown (especially about code), and then LLMs trained on it.

So Markdown (perhaps with a bit of XML sprinkled in) is already the lingua franca of LLMs -- they've been trained on tons of it, it's what they want to speak. They also probably read all of our Reddit and 4chan posts, but Markdown was where a lot of the good stuff was. A bit of authoring friction and structure is a nice quality filter.

I started this blog because I think of LLMs as fuzzy microprocessors, and wanted to explore what this might mean.

Programming is evolving fast right now, but it's gone through many transitions in the past. Since we find ourselves in a new period of change, it's worth stepping back and asking: what is a "computer program" anyway?

What is a "computer program"?

When I looked it up in a few dictionaries, I found several definitions like this:

a set of instructions that makes a computer do a particular thing

Ok, that makes sense, but what then is a "computer"? When I looked that up, I'd find definitions like this:

A computer is a programmable machine that can perform arithmetic and logical operations

If there's ambiguity (and circularity) in these definitions, it's because the specific form of what a "computer" is has been evolving for the last 180 years or so, and continues to do so.

One of the first ever "computer programmers" was Ada Lovelace, who published the source code in 1843 for computing Bernoulli numbers on Charles Babbage's Analytical Engine. She was already moving up the ladder of abstraction before any compiler (or even the engine) had been fully built.

For Babbage, both the "program" (i.e. predefined instructions) and "input" (data provided at runtime) were encoded on punch cards, which he borrowed from the textile industry, where they were already widely used to control complex mechanical machines like like the Jacquard loom (1804).

Later, Herman Hollerith adapted punch cards for data processing and tabulating in the 1890 United States census, and from there there's a fairly direct line to the origin of IBM, founded in 1911 as the Computing-Tabulating-Recording Company (CTR).

It turns out naming (a century before Air Bed and Breakfast or ChatGPT o3-mini-high) has always been one of the hardest problems in even pre-"computer" science. Some things never change. Nevertheless, CTR became the leading manufacturer of punch card tabulating systems, and rebranded as IBM.

By modern (Turing-Church) standards, we wouldn't consider early IBM tabulating machines as "computers". Imagine tabulating and printing Social Security beneficiary records, in a similar way to how a Jacquard loom was creating complex weaving patterns. Is that a computer? Not quite, but it's already in the ballpark, and pushing on "punchcards but better" would probably get you pretty close.

In any case, most things that are eventually big start out as something small, and eventually IBM invented the System/360, which became the dominant mainframe computer in the 1960s and 70s.

Is this ... a "program"?

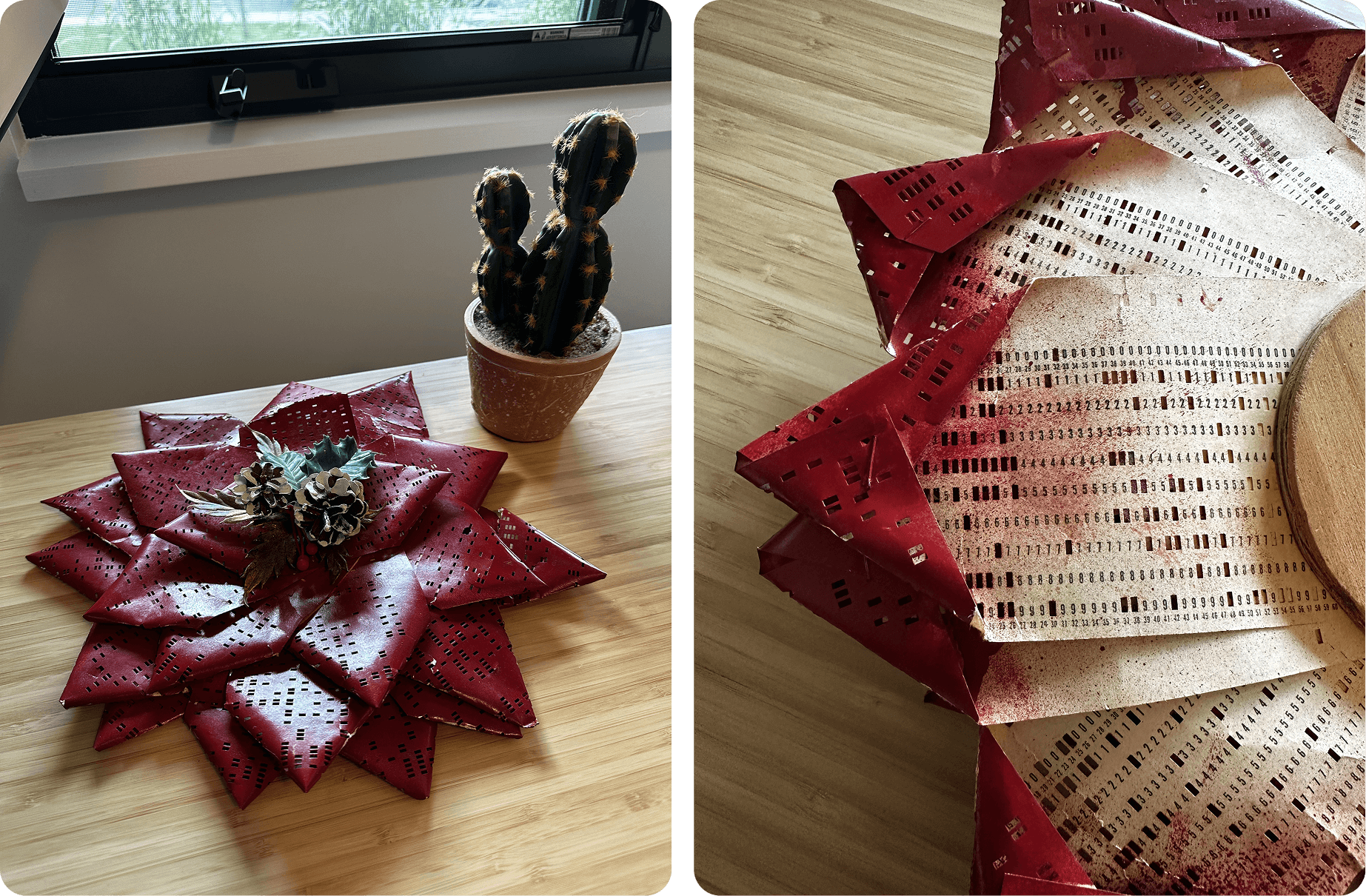

When my mother-in-law was in 9th grade in the late 1960s, her dad (a WWII pilot who then worked at IBM) brought home discarded sets of 80-column Hollerith punch cards. To raise money for her Girl Scout troop, she folded and painted them into Christmas wreaths she sold door-to-door for $1 apiece in St. Louis.

We found one in her basement, and I keep it here on my desk as a physical reminder that "programming" has always been about writing clear instructions, even if the specific form they take has changed many times.

My mother-in-law later did a two-semester Master's course where she programmed a whole operating system on two full boxes of punch cards, which were fed into a card reader for the final exam. She later went on to work at IBM herself, as it progressed through 30 years of computing.

Moving up the ladder of abstraction

The early history of computing is fascinating because so many layers were changing in parallel.

Input devices evolved from punch cards to magnetic tape, disks, and interactive terminals.

Programming evolved from machine-specific binary opcodes to more readable assembly languages with mnemonics like ADD or LOAD.

The C programming language was a big step up, invented at Bell Labs in the early 1970s. It retained the efficiency and control of assembly, but was much easier to read, more expressive, and portable across architectures (via compilers). Unix gave us the philosophy of small, modular tools (composed via pipes), and flavors of Unix or Linux still run on the vast majority of computers in use today, from the cheapest phones to the biggest servers.

We continued evolving up this ladder into higher level languages like C++ (1980s), Python and JavaScript (1990s), Ruby on Rails (2000s), Go and TypeScript (2010s), and so on.

Notice that at each step up the ladder, the lower layers of code are still there, and still important.

The Python interpreter (CPython) is written in C and compiled to machine code. TypeScript compiles to a version of JavaScript (such as ES2022) that's run by an engine like V8 (C++) or Node.js (more C++ and C libraries). If you're currently a COBOL expert (designed in 1959), you likely still have good job prospects today 85 years later.

Programmers were never "replaced" or automated away, even as the ways we wrote instructions were completely transformed many times.

Higher level instructions help programmers express our intent more clearly and efficiently, while new tools (like linters, formatters, IDE autocomplete, test frameworks, etc) help us improve correctness or safety.

Each step up was closer to human-readable intent, and less machine-specific.

Where are we now?

I think we're in the middle of another confusing step up the ladder.

If you're a software engineer (or UX designer, or PM), this is probably very uncomfortable (me too!). There are no experts yet. We're all figuring it out together.

I imagine the folks who had invested multiple semesters in learning to program a whole operating system on a specific punch card or assembly dialect prior to C coming along probably felt the same way?

In the long run, moving up the ladder gives humans collectively more leverage to control our world, but in the short term, if you've invested in expertise on the previous rung, change can be scary or confusing.

I'm trying to force myself to (mostly) program in a Markdown + XML pidgin that has no real tooling or best practices around it yet. If I do that, what breaks? What supporting tools might I need?

Model capabilities have raced ahead of our ability to curate enough high quality context to feed into them. Before we can figure out how one "fuzzy CPU" works, there's already a smarter one with a slightly different instruction set.

But I think Markdown instructions will likely win in some (but not all) areas of software, because they're more universal than Python or Rust code. All tools can consume them. And more people can read and discuss how we want systems to work.

Understanding how computers (clients, servers, databases, and so on) is still a valuable skill. Good design will never go out of style. Clear written communication (and aligning people) will always be useful.

But as a provocation, how might we specify a whole design system in plain text? What might "Unix, but Markdown" or "infrastructure-as-Markdown" look like? What would a plain English linter do for you?

If I write a good enough spec, generating the tests and code is now cheap. But writing a great spec is still hard! How can we make that easier, clearer, safer?

We're standing on the shoulders of giants -- Engelbart, Thompson, Ritchie, McIlroy, Kernighan, Pike, and many others. With Markdown, Gruber and Swartz were way ahead of their time. They created an elegant open format for communicating content across both people and computers, long before anyone anticipated LLMs or even how well Deep Learning would work.

I plan to keep pushing myself to code in Markdown, and see what breaks. I'll share learnings and open source tools in future posts (follow along if you like).

Thanks to Katie Holden and James Dillard for helpful feedback on drafts.